QN#12 Closing the MMM application gap

With the explosion in investment in media effectiveness measurement tools, specialists, and solutions, the need to prove results arises early in the process. Are the recommendations from all these analytics projects driving higher revenue? Higher ROI? Many projects fail, and the main reason lies in the inability to turn outcomes into decisions that generate business impact.

For those who have run these projects before, the harsh reality is clear: driving change is hard. Expensive and exhausting MMM projects often lead to extensive debrief sessions but ultimately lose momentum. Months later, how much real change do these projects deliver? Why do they fail to "move the needle"?

The soft element

Effective researchers need to recognize that they are in the business of driving change. It’s not just about the results—countless articles and books have already covered that. In this newsletter, we aim to highlight some elements that have worked well in our previous projects:

Having the right stakeholders and sponsors: This means not just sufficient seniority but also ensuring a broad representation across teams.

Asking the right business questions: Collect business questions but avoid a "shopping list" approach. You may find that your stakeholders think in different terms, but MMM is often flexible enough to adapt its modeling to answer the key questions.

Shifting from a “project” to a “process” mindset: A project gets delivered, and people move on. A process is ongoing, with research serving as one component of a continuous loop.

Making stakeholders “sweat for it”: Encourage stakeholders to contribute data and business context. This not only leads to better outcomes but also ensures they have skin in the game.

Avoiding “insulting” recommendations: An “insulting recommendation” is one that, even if supported by data, is too detached from reality. It can make you appear like a brilliant mathematician disconnected from the business world.

Precision is not the ultimate KPI: The goal of MMM is to drive ROI improvements, not just to create perfectly precise explanations of past performance.

Allowing enough time for discussion during debriefs: Obvious but often overlooked, robust debate is critical for gaining alignment and ensuring actionable outcomes.

The hard element

Good recommendations must be translatable into actionable steps for the people implementing them. Often, MMM outputs are difficult to translate into clear, practical decisions. What do we mean?

Budget shifts are not simple: Today, a large portion of media purchasing is automated. For instance, increasing or decreasing investment in generic paid search requires navigating multiple options. Additionally, MMM models rely on past performance, but automated media buying adjusts dynamically. Changes in how competitors bid for the same keywords can drastically alter response curves.

Small changes often matter most: Drastic changes based on MMM outputs can invalidate the model itself, as the context in which it was built shifts. Test large changes in a controlled environment to validate the direction suggested by the MMM.

Look at further implications of the changes: A common MMM recommendation might be to reduce GRPs per week and redistribute them to avoid blank weeks. However, this can reduce Share of Voice (SOV) to levels that media agencies and brand managers might consider unsafe. Understanding these nuances is critical.

These are just a few examples of why making the most of an MMM is far from guaranteed and is often not achieved in the first iteration. Resilience and iteration are a must when a project seems to be failing. In marketing measurement, as in many other fields, failures must lead to learnings that translate into future success and overall ROI improvements.
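One narrow aspect of the "small changes" point can be sketched numerically: a marginal return estimated around today's spend level extrapolates poorly to a large budget move. The sketch below assumes a Hill-type saturation curve with made-up parameters (`top`, `half_sat`, `shape`) and an arbitrary current spend. None of these come from a real model, and the sketch does not capture the shift in market context (for example, competitor bidding) that the text also warns about.

```python
# Illustrative sketch only: the curve shape and all parameter values are assumed,
# not taken from any real MMM.

def response(spend, top=1000.0, half_sat=50.0, shape=1.5):
    """Hypothetical Hill-type saturation curve: incremental sales for a weekly spend."""
    return top * spend**shape / (half_sat**shape + spend**shape)


def marginal_return(spend, step=1.0):
    """Extra sales per extra unit of spend near `spend` (forward difference)."""
    return (response(spend + step) - response(spend)) / step


def extrapolation_gap(current, change):
    """Relative overstatement when today's marginal return is assumed to hold
    across the whole budget change, compared with the value on the curve."""
    new_spend = current * (1 + change)
    extrapolated = response(current) + marginal_return(current) * (new_spend - current)
    on_curve = response(new_spend)
    return (extrapolated - on_curve) / on_curve


current_spend = 60.0  # assumed current weekly spend, arbitrary units
for change in (0.05, 0.10, 0.50, 1.00):
    gap = extrapolation_gap(current_spend, change)
    print(f"budget +{change:.0%}: linear extrapolation overstates sales by {gap:.1%}")
```

Under these assumptions the gap is negligible for a 5% shift and grows quickly as the change gets larger, which is one reason to validate big moves with a controlled test before committing to them.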

MMM innovations may be of help

Methodological improvements are intellectually satisfying but often result in only marginal increases in a project’s real-world impact. However, some improvements are particularly valuable—those that close the gap between the model’s outputs and actionable steps.

One example is modeling reach and frequency rather than stopping at impressions. Media planners and buyers often work with reach and frequency metrics, so presenting results in impressions (even if converted to GRPs) creates an unnecessary barrier to action.
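To see why impressions or GRPs alone can hide what planners care about, recall the standard relationship: GRPs equal reach (as a percentage of the target population) multiplied by average frequency. The minimal sketch below uses two hypothetical plans with invented numbers. Both deliver the same impressions and GRPs, yet they differ sharply in reach and frequency.

```python
# Illustrative sketch: plan names and all figures are hypothetical.

def grps(impressions, target_population):
    """Gross rating points: impressions as a percentage of the target population."""
    return 100.0 * impressions / target_population


def reach_pct(people_reached, target_population):
    """Share of the target population exposed at least once, in percent."""
    return 100.0 * people_reached / target_population


def average_frequency(impressions, people_reached):
    """Average number of exposures per person reached."""
    return impressions / people_reached


population = 10_000_000  # assumed size of the target audience
plans = {
    "broad":  {"impressions": 6_000_000, "people_reached": 3_000_000},
    "narrow": {"impressions": 6_000_000, "people_reached": 1_000_000},
}

for name, plan in plans.items():
    g = grps(plan["impressions"], population)
    r = reach_pct(plan["people_reached"], population)
    f = average_frequency(plan["impressions"], plan["people_reached"])
    # GRPs = reach % x average frequency, so g and r * f should agree.
    print(f"{name}: GRPs={g:.0f}, reach={r:.0f}%, avg frequency={f:.1f}, reach x freq={r * f:.0f}")
```

A model fed only impressions or GRPs cannot tell these two plans apart, while a planner would treat them as very different buys.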

Traditionally, MMM outputs have been static and hard to navigate, making it challenging to update findings with new data. Today, many providers offer interactive dashboards that integrate data from multiple sources, update results regularly, and provide forecasts and scenario planning. For many organizations, this shift makes MMM outcomes significantly more actionable.

The success of MMM projects doesn’t rest solely on the quality of the model or the accuracy of the data. It relies on the ability to drive organizational change and translate recommendations into actions that stakeholders can realistically implement.

Industry updates and upcoming events

Is pursuing mental availability a safe bet?

Felipe Thomaz has openly criticized Byron Sharp’s insights in a recent interview in Mi3 (link). He recently conducted research with Kantar and Wavemaker that, according to him, proves that pursuing mental availability through mass reach is not a safe bet. The full research will be published in 2025.

New WARC report on “The holistic path to measuring media investments”

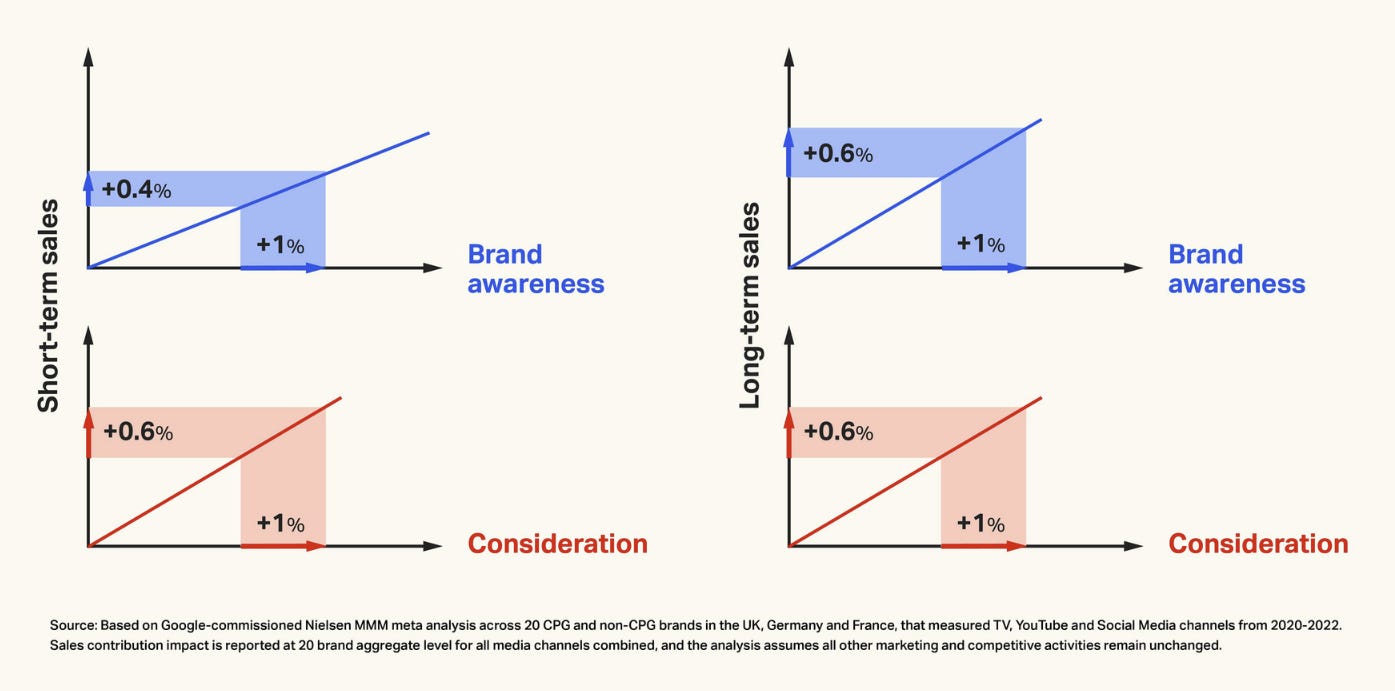

Google’s Michal Protasiuk and Ahmet Bas recently published a report in partnership with WARC, based mostly on Nielsen MMM (with some Ipsos MMM, too). The research quantifies the link between brand equity metrics and short- and long-term sales. It also reconfirms the 60/40 rule of branding/performance. This WARC report is open to the public and you can read it at this link.

The next chapter of the measurement providers saga

An interesting move in the MMM vendor space: NielsenIQ has launched MMM services. The Nielsen saga is fairly complex. What was once a single company was split in 2020 into Nielsen Media and NielsenIQ. At first, Nielsen Media kept the MMM capabilities while NielsenIQ focused on sales and household panels. Later, NielsenIQ acquired GfK and rebranded as NIQ. In the meantime, Nielsen Media rebranded itself as “Nielsen”. With this latest move, NIQ enters the MMM space, effectively becoming a competitor to Nielsen Media. More details are in this press release.

Do not miss: Meta EMEA Measurement Forum

Meta recently held its 2024 Measurement Forum in EMEA. This is a strong signal of Meta’s commitment to measurement in general and MMM in particular. You can check the recordings here. It’s clear that long-term ROI and brand building are coming into the mainstream. They also share a Kantar meta-analysis on creative best practices (we discussed that topic in QN10).

Chart of the week

Are you into Padel or Pickleball? Both of those sports are exploding in popularity, with a really interesting geographical divide.

Oldies but goodies

Les Binet is the Group Head of Effectiveness at adam&eveDDB and has become an essential figure in marketing effectiveness, challenging marketers to think differently about the balance between long-term brand building and short-term sales activation. He is also very active on LinkedIn, where he shares insightful content about effectiveness. His work with Peter Field, captured in The Long and the Short of It, exposes a critical tension: the constant need for immediate results versus the patience required to build sustainable growth.

The essence of Binet’s message is clear: short-term activation campaigns (promotions or performance marketing) can deliver a quick win, but they rarely strengthen a brand in the way long-term efforts do. Brand-building campaigns, designed to evoke emotion and create lasting associations, take longer to pay off but are essential for profitability in the long run.

This is a conversation we have held with many marketers, often agreeing on the following: because short-term results seem easier to measure and are easy to report to management, companies have a strong bias towards them. This creates a growing and long-lasting dependency on performance marketing. As a result, these companies fall into a trap that is very hard to escape.

The effectiveness data in the book are derived from the IPA Effectiveness Databank – the product of 30 years of the IPA Effectiveness Awards covering more than 700 brands in over 80 categories.

For those of us obsessed with measurement, this creates a challenge. Tools like attribution models and performance-focused MMMs are often better suited to measuring short-term performance, reinforcing a bias toward immediate ROI. But over-focusing on the short term risks trapping businesses in a spending treadmill, where results disappear as soon as the budget is paused. At the same time, ignoring short-term performance can lead to impatience and missed opportunities.

Binet’s principles are more relevant than ever. The question is: how can we measure and balance both horizons? While data-rich environments make short-term results easier to quantify, we must push measurement tools to accommodate the bigger picture, too. That’s where frameworks and discipline in measurement methodology can help, not just to measure what’s working now but to guide sustainable, long-term growth. Data from the research also shows that, to obtain maximum effectiveness, “brands should spend around 60% of their budget on brand-building activity and 40% on activation”.

In previous editions of Quantified Nation we have discussed branding metrics and how to link them to business results, as well as the value of nested MMMs, which better capture the long-term effect of marketing. Also, Share of Search is suggested as a proxy for share of voice to understand the degree to which a brand is top-of-mind for consumers.
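As a quick illustration of the metric: Share of Search is usually computed as a brand's search volume divided by the combined search volume of all brands in its category. The sketch below assumes you already have branded search volumes for one period. The brand names and numbers are invented, and the choice of which brands and keywords define the category is a judgment call that the code does not address.

```python
# Illustrative sketch: brand names and search volumes are hypothetical.

def share_of_search(volumes):
    """Return each brand's share of total branded search volume in the category."""
    total = sum(volumes.values())
    if total == 0:
        raise ValueError("total search volume must be positive")
    return {brand: volume / total for brand, volume in volumes.items()}


monthly_searches = {"Brand A": 50_000, "Brand B": 30_000, "Brand C": 20_000}

for brand, share in share_of_search(monthly_searches).items():
    print(f"{brand}: {share:.0%} share of search")
```

Tracked over time, changes in these shares are what practitioners watch as an early signal of shifts in top-of-mind awareness.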

There are many learnings in this book, with valuable examples, research results and easy-to-digest conclusions. Go ahead, send it to your e-reader, get a copy or print the PDF. You will always be in a better position as a marketer if you read this book.